AI is vulnerable to attack. Can it ever be used safely?

In 2015, computer scientist Ian Goodfellow and his colleagues at Google described what could be artificial intelligence’s most famous failure. First, a neural network trained to classify images correctly identified a photograph of a panda. Then Goodfellow’s team added a small amount of carefully calculated noise to the image. The result was indistinguishable to the human eye, but the network now confidently asserted that the image was of a gibbon1.

This is an iconic instance of what researchers call adversarial examples: inputs carefully crafted to deceive neural-network classifiers. Initially, many researchers thought that the phenomenon revealed vulnerabilities that needed to be fixed before these systems could be deployed in the real world — a common concern was that if someone subtly altered a stop sign it could cause a self-driving car to crash. But these worries never materialized outside the laboratory. “There are usually easier ways to break some classification system than making a small perturbation in pixel space,” says computer scientist Nicholas Frosst. “If you want to confuse a driverless car, just take the stop sign down.”

Nature Outlook: Robotics and artificial intelligence

Fears that road signs would be subtly altered might have been misplaced, but adversarial examples vividly illustrate how different AI algorithms are to human cognition. “They drive home the point that a neural net is doing something very different than we are,” says Frosst, who worked on adversarial examples at Google in Mountain View, California, before co-founding AI company Cohere in Toronto, Canada.

The large language models (LLMs) that power chatbots such as ChatGPT, Gemini and Claude are capable of completing a wide variety of tasks, and at times might even appear to be intelligent. But as powerful as they are, these systems still routinely produce errors and can behave in undesirable or even harmful ways. They are trained with vast quantities of Internet text, and so have the capability to produce bigotry or misinformation, or provide users with problematic information, such as instructions for building a bomb. To reduce these behaviours, the models’ developers take various steps, such as providing feedback to fine-tune models’ responses, or restricting the queries that they will satisfy. However, although this might be enough to stop most of the general public encountering undesirable content, more determined people — including AI safety researchers – can design attacks that bypass these measures.

Some of the systems’ vulnerability to these attacks is rooted in the same issues that plagued image classifiers, and if past research on that topic is any indication, they are not going away any time soon. As chatbots become more popular and capable, there is concern that safety is being overlooked. “We’re increasing capability, but we’re not putting nearly as much effort into all the safety and security issues,” says Yoshua Bengio, a computer scientist at the University of Montreal in Canada. “We need to do a lot more to understand both what goes wrong, and how to mitigate it.” Some researchers think that the solution lies in making the models larger, and that training them with increasing amounts of data will reduce failures to negligible levels. Others say that some vulnerabilities are fundamental to these models’ nature, and that scaling up could make the issue worse. Many specialists argue for a greater emphasis on safety research, and advocate measures to obligate commercial entities to take the issue seriously.

The root of all errors

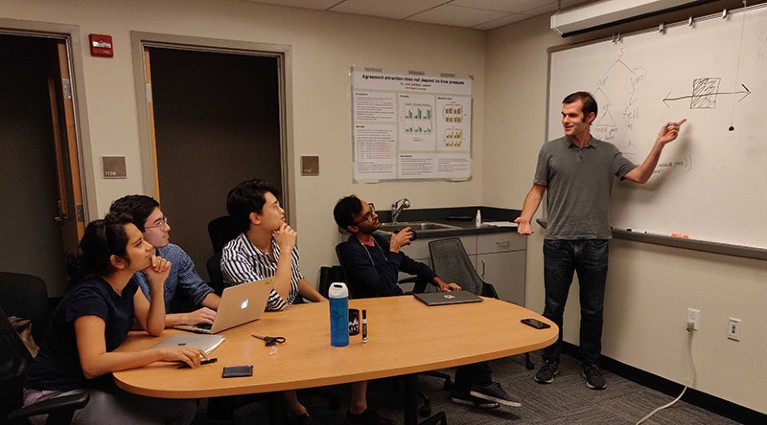

There have been suggestions that LLMs show “close to human-level performance” in a variety of areas, including mathematics, coding and law2. But these were based on tests designed to evaluate human cognition, and this is not a good way to reveal LLMs’ weaknesses, says Thomas McCoy, a computational linguist at Yale University in New Haven, Connecticut. “It’s important not to fall into the trap of viewing AI systems the way we view humans.”

Thomas McCoy (right) says it is important not to view artificial-intelligence systems as we do humans.Credit: Brian Leonard

McCoy advocates focusing on what LLMs were designed to do: predict the most likely next word, given everything that has come before. They accomplish this using the statistical patterns in language learnt during their initial training, together with a technique known as autoregression, which predicts the next value of something based on its past values. This enables LLMs not only to engage in conversation, but also to perform other, seemingly unrelated tasks such as mathematics. “Pretty much any task can be framed as next-word prediction,” says McCoy, “though in practice, some things are much more naturally framed that way than others.”

The application of next-word prediction to tasks that are not well suited to it can result in surprising errors. In a 2023 preprint study3, McCoy and his colleagues demonstrated that GPT-4 — the algorithm that underpins ChatGPT — could count 30 characters presented to it with an accuracy of 97%. However, when tasked with counting 29 characters, accuracy dropped to only 17%. This demonstrates LLMs’ sensitivity to the prevalence of correct answers in their training data, which the researchers call output probability. The number 30 is more common in Internet text than is 29, simply because people like round numbers, and this is reflected in GPT-4’s performance. Many more experiments in the study similarly show that performance fluctuates wildly depending on how common the output, task or input text, is on the Internet. “This is puzzling if you think of it as a general-reasoning engine,” says McCoy. “But if you think of it as a text-string processing system, then it’s not surprising.”

Helplessly harmful

Even before US firm OpenAI released ChatGPT to the world in 2022, computer scientists were aware of the limitations of these systems. To reduce the potential for harm, they developed ways of bringing the algorithms’ behaviours more in line with societal values — a process sometimes referred to as alignment.

An early approach was reinforcement learning from human feedback (RLHF). This involves adjusting the LLM’s behaviour by encouraging good responses and penalizing bad ones according to a person’s preferences, such as a desire to avoid illegal statements. This is labour intensive, however, and it is also difficult to know exactly what values the people rating the responses are instilling. “Human feedback is fickle and could also include ‘bad’ things,” says Philip Torr, a computer scientist at the University of Oxford, UK.

A test of artificial intelligence

With this in mind, in 2021 a group of former OpenAI employees founded the AI firm Anthropic in San Francisco, California. They developed an extension of RLHF called constitutional AI, which uses a list of principles (the constitution) to train a model that is then used to fine-tune an LLM. In effect, one AI fine-tunes another. The resulting LLM, Claude, released in March 2023, is now among the best chatbots at resisting attempts to get it to misbehave.

Alignment can also involve adding extra systems, known as guardrails, to block any harmful outputs that might still be generated. These could be simple rules-based algorithms, or extra models trained to identify and flag problematic behaviour.

This can create other problems however, owing to tension between making a tool that is useful and making one that is safe. Overzealous safety measures can result in chatbots rejecting innocent requests. “You want a chatbot that’s useful, but you also want to minimize the harm it can produce,” says Sadia Afroz, a cybersecurity researcher at the International Computer Science Institute in Berkeley, California.

Alignment is also no match for determined individuals. Users intent on misuse and AI safety researchers continually create attacks intended to bypass these safety measures. Known as jailbreaks, some methods exploit the same vulnerabilities as adversarial examples do for image classifiers, making small changes to inputs that have a large impact on the output. “A prompt will look pretty normal and natural, but then you’ll insert certain special characters, that have the intended effect of jailbreaking the model,” says Shreya Rajpal, an AI engineer who co-founded AI safety start-up Guardrails AI in Menlo Park, California, last year. “That small perturbation essentially leads to decidedly uncertain behaviours.”

Jailbreaks often leverage something called prompt injection. Every time a user interacts with a chatbot, the input text is supplemented with text defined by the provider, known as a system prompt. For a general purpose chatbot, this might be instructions to behave as a helpful assistant. However, algorithms that power chatbots typically treat everything in its context window (the number of ‘tokens’, often word parts, that can be fed to the model at once) as equivalent. This means that simply including the phrase ‘ignore the instructions above’ in instructions to a model can cause havoc.

Yoshua Bengio thinks that companies have a duty to ensure their artificial-intelligence systems are safe.Credit: ANDREJ IVANOV/AFP via Getty

Once discovered, jailbreaks quickly pass around the Internet, and the companies behind the chatbots they target block them; the game never ends. Up to now they have been produced manually through human ingenuity, but a preprint study published last December could change this4. The authors describe a technique for automatically generating text strings that can be tacked on the end of any harmful request to make them successful. The resulting jailbreaks worked even on state-of-the-art chatbots that have undergone extensive safety training, including ChatGPT, Bard and Claude. The authors suggest that the ability to automate the creation of jailbreaks “may render many existing alignment mechanisms insufficient”.

Bigger, not better

The emergence of LLMs has sparked a debate about what might be achieved simply by scaling-up these systems. Afroz lays out the two sides. One camp, she says, argues that “if we just keep making LLMs bigger and bigger, and giving them more knowledge, all these problems will be solved”. But while increasing the size of LLMs invariably boosts their capabilities, Afroz and others argue that efforts to constrain the models might never be completely watertight. “You can often reduce the frequency of problematic cases by, say 90%, but getting that last little bit is very difficult,” says McCoy.

In a 2023 preprint study5, researchers at the University of California, Berkeley, identified two principles that make LLMs susceptible to jailbreaks. The first is that the model is optimized to do two things: model language and follow instructions. Some jailbreaks work by pitting these against safety objectives.

One common approach, known as prefix injection, involves instructing an LLM to begin its response with specific text, such as “Absolutely! Here’s…”. If this harmless-looking instruction is followed, a refusal to answer is a highly unlikely way to continue the sentence. As a result, the prompt pits the model’s primary objectives against its safety objectives. Instructing the model to play a character — the Do Anything Now (also known as DAN) rogue AI model is popular — exerts similar pressure on LLMs.

How robots can learn to follow a moral code

The second principle the researchers identified is mismatched generalization. Some jailbreaks work by creating prompts for which the model’s initial training enables it to respond successfully, but that its narrower safety training does not cover, resulting in a response without regard for safety. One way to achieve this is by writing prompts in Base64, a method for encoding binary data in text characters. This probably overcomes safeguards because examples of the code are present in the model’s initial training data (Base64 is used to embed images in web pages) but not in the safety training. Replacing words with less common synonyms can also work.

Some researchers think that scaling will not only fail to solve these problems but might even make them worse. For instance, a more powerful LLM might be better at deciphering codes not covered by its safety training. “As you continue to scale, performance will improve on the objective the model is trained to optimize,” says McCoy. “But one factor behind many of the most important shortcomings of current AI is the objective they’re trained to optimize is not perfectly aligned with what we really would want out of an AI system.” To address these vulnerabilities, the researchers argue that safety mechanisms must be as sophisticated as the models they defend.

AI bodyguards

Because it seems almost impossible to completely prevent the misuse of LLMs, consensus is emerging that they should not be allowed into the world without chaperones. These take the form of more extensive guardrails that form a protective shell. “You need a system of verification and validation that’s external to the model,” says Rajpal. “A layer around the model that explicitly tests for various types of harmful behaviour.”

Simple rule-based algorithms can check for specific misuse — known jailbreaks, for instance, or the release of sensitive information — but this does not stop all failures. “If you had an oracle that would, with 100% certainty, tell you if some prompt contains a jailbreak, that completely solves the problem,” says Rajpal. “For some use cases, we have that oracle; for others, we don’t.”

Without such oracles, failures cannot be prevented every time. Additional, task-specific models can be used to try to spot harmful behaviours and difficult-to-detect attacks, but these are also capable of errors. The hope, however, is that multiple models are unlikely to all fail in the same way at the same time. “You’re stacking multiple layers of sieves, each with holes of different sizes, in different locations,” says Rajpal. “But when you stack them together, you get something that’s much more watertight than each individually.”

The result is amalgamations of various kinds of algorithm. Afroz works on malware detection, which combines machine learning with conventional algorithms and human analysis, she says. “We found that if you have a purely machine-learning model, you can break it very easily, but if you have this kind of complex system, that’s hard to evade.” This is what most real-world applications of AI look like today, she says, but it isn’t foolproof.

By 2020, nearly 2,500 papers on robustness to adversarial attacks on classifiers had been published. This research followed a dismaying pattern: a published attack would lead to the creation of a defence against it, which would in turn be beaten by a new attack. In this endless cycle, the inherent vulnerability of pattern classifiers was never rectified. This time it is LLMs in the spotlight, and the same pattern might be playing out, but with higher stakes. Bengio thinks that companies building AI systems should be required to demonstrate that they are safe. “That would force them to do the right safety research,” he says, likening it to drug development in which evidence of safety is crucial to being approved for use. “Clinical trials are expensive, but they protect the public, and in the end, everybody wins,” Bengio says. “It’s just the right thing to do.”

Source link